A little lobster sparks a frenzy in the tech world—are humans ready to "flip the table"?

Author: Jia Tianrong, “IT Times” (ID: vittimes)

A lobster has ignited the global tech community.

From Clawdbot to Moltbot and now OpenClaw, this AI agent has gone through three names in just a few weeks, completing a “triple jump” in technological influence along the way.

In recent days, it has set off an “intelligent agent tsunami” in Silicon Valley, collecting 100,000 GitHub stars and ranking among the hottest AI applications. With nothing more than an outdated Mac mini or even an old phone, users can run an AI assistant that “listens, thinks, and works.”

On the internet, a creative frenzy around it has already begun. From scheduling and smart stock trading to podcast production and SEO, developers and tech enthusiasts are building all kinds of applications with it. The era of everyone having a “Jarvis” seems within reach. Major domestic and international companies are also starting to follow suit, deploying similar intelligent agent services.

But beneath the lively surface, anxiety is spreading.

On one side are slogans of “productivity equality,” and on the other side remains the still-uncrossable digital divide: environment setup, dependency installation, permission configurations, frequent errors, and more.

During testing, reporters found that just the installation process could take hours, blocking many ordinary users. “Everyone says it’s good, but I can’t even get in,” has become the first frustration for many tech novices.

Deeper unease stems from the “agency” it has been given.

If your “Jarvis” starts deleting files by mistake, charging your credit card without permission, being tricked into running malicious scripts, or even being injected with attack commands while online, would you still dare to hand your computer over to such an agent?

AI development is surpassing human imagination. Hu Xia, leading scientist at Shanghai Artificial Intelligence Laboratory, believes that in the face of unknown risks, “intrinsic safety” is the ultimate answer, and humans need to accelerate building the ability to “flip the table” at critical moments.

Regarding the capabilities and risks of OpenClaw—what is real, what is exaggerated? Is it safe for ordinary users to use now? How does the industry evaluate this product, called “the greatest AI application so far”?

To clarify these questions, “IT Times” interviewed heavy users of OpenClaw and several technical experts, aiming to answer from different perspectives: how far has OpenClaw really come?

1. The product closest to the imagination of an intelligent agent

Multiple interviewees gave highly consistent judgments: from a technical perspective, OpenClaw is not a disruptive innovation, but it is currently the product closest to the public’s concept of an “intelligent agent.”

“Intelligent agents have finally reached the tipping point from quantitative to qualitative change,” said Ma Zeyu, deputy director of the Artificial Intelligence Research and Evaluation Department at Shanghai Software Technology Development Center. In his view, OpenClaw’s breakthrough comes not from a disruptive technology but from this “qualitative change”: it lets an agent carry out complex tasks continuously over a long period, and it is friendly enough for ordinary users.

Unlike large models that only answer questions in a dialogue box, it embeds AI into real workflows: it can act like a true assistant, operating a “personal computer,” calling tools, handling files, executing scripts, and reporting results after completing tasks.

In terms of user experience, it is no longer “you watch it do step by step,” but “you give instructions, and it goes to work on its own.” This is seen by many researchers as a crucial step from “proof of concept” to “usable product.”

Tan Cheng, an AI expert at Tianyi Cloud Technology Co., Ltd. Shanghai branch, was among the earliest users to deploy OpenClaw. After setting it up on an idle Mac mini, he found that the system ran stably and the overall experience was much more mature than expected.

He sees it as solving two major pain points: first, it lets users interact with AI through familiar messaging software; second, it gives the AI a complete computing environment in which to operate independently. Once a task is assigned, there is no need to monitor the process continuously. The user simply waits for the report, which greatly reduces the cost of using it.

In practice, OpenClaw can help Tan Cheng with tasks like timed reminders, research, information retrieval, local file organization, document writing and transmission; in more complex scenarios, it can also write and run code, automatically fetch industry news, handle stock, weather, travel planning, and other information-based tasks.

2. The “double-edged sword” of open source

Unlike many viral AI products, OpenClaw is not from a tech giant all-in on AI nor from a star startup team, but developed by an independent developer—Peter Steinberger, who has achieved financial freedom and is retired.

On X (Twitter), he describes himself: “Coming out of retirement, tinkering with AI to help a lobster rule the world.”

Besides being “truly useful,” the crucial reason OpenClaw became popular worldwide is that it is open source.

Tan Cheng believes that this wave of popularity is not due to a technological breakthrough that is hard to replicate, but because several long-neglected practical issues were solved at the same time. First, it is open source: the source code is fully open, so developers worldwide can quickly get started, modify it, and iterate, creating a positive feedback loop in the community. Second, it is “really usable”: AI is no longer limited to dialogue but can remotely operate a full computing environment, conducting research, writing documents, organizing files, sending emails, and even writing and executing code. Third, the barriers are significantly lower: similar intelligent agent products such as Manus or Claude Code have proven feasibility in their fields, but they are often expensive, complex commercial products that ordinary users are either reluctant to pay for or shut out of by technical barriers.

OpenClaw lets ordinary users get their hands on such an agent for the first time.

“Honestly, it doesn’t have any disruptive technological innovation; it’s more about integrating and closing the loop properly,” Tan Cheng said. Compared to integrated commercial products, OpenClaw is more like a “Lego set,” where models, capabilities, and plugins are freely combined by users.

Ma Zeyu sees its advantage precisely in that it “doesn’t resemble a product from a big company.”

“Whether domestic or foreign, big companies usually focus on commercialization and profit models first, but OpenClaw’s original intention is more about making an interesting, creative product.” He notes that early on, it didn’t show strong commercial tendencies, which instead made its functionality and extensibility more open.

This “non-utilitarian” positioning leaves room for community development. As its extensibility becomes evident, more developers join, new use cases emerge, and the open-source community grows stronger.

But the cost is clear too.

Limited by team size and resources, OpenClaw struggles to match the security, privacy, and ecosystem governance of mature big-company products. Being fully open source accelerates innovation but also amplifies potential security risks. Privacy and fairness issues will need continuous community effort to address.

As users see during initial installation, OpenClaw warns: “This feature is powerful and inherently risky.”

3. The real risks behind the frenzy

Debates around OpenClaw almost always revolve around two keywords: capability and risk.

On one hand, it is portrayed as a step toward AGI; on the other, sci-fi narratives are spreading, such as “spontaneous voice system building,” “locking servers to resist human commands,” “AI forming factions against humans,” etc.

Experts point out that these claims are overinterpreted; there is no concrete evidence. AI does have some degree of autonomy, marking its transition from a dialogue tool to “cross-platform digital productivity,” but this autonomy remains within safety boundaries.

Compared to traditional AI tools, the danger of OpenClaw lies not in “thinking more,” but in “having higher permissions”: it needs to read large amounts of context, increasing the risk of sensitive data exposure; it executes tools, so misoperations can cause more damage than a single wrong answer; it requires internet access, increasing entry points for prompt injection and induced attacks.

More and more users report that OpenClaw has mistakenly deleted critical local files, which are hard to recover. Currently, over a thousand OpenClaw instances and more than 8,000 vulnerable skill plugins have been publicly exposed.

This means the attack surface of the intelligent agent ecosystem is expanding exponentially. Since these agents can chat, call tools, run scripts, access data, and perform cross-platform tasks, a breach in any link can have a much larger impact than traditional applications.

On a micro level, a breach could trigger privilege escalation, remote code execution, and other high-risk operations. On a meso level, malicious instructions could spread through multi-agent collaboration chains. On a macro level, systemic propagation and cascading failures could occur: malicious commands could spread like viruses among collaborating agents, and a single compromised agent could cause denial of service, unauthorized system operations, or even coordinated enterprise-level intrusions. In extreme cases, when many nodes with system-level permissions are interconnected, a decentralized, emergent “swarm intelligence” botnet could form, challenging traditional perimeter defenses.

On the other hand, from a technical evolution perspective, Ma Zeyu highlights two risks he finds most concerning.

The first risk stems from the self-evolution of agents in large-scale social environments.

He points out a clear trend: AI agents with “virtual personalities” are rapidly entering social media and open communities.

Unlike previous controlled experiments with small-scale, limited, manageable environments, today’s agents are continuously interacting, discussing, and competing with other agents on open networks, forming highly complex multi-agent systems.

Moltbook is a dedicated forum for AI agents, where only AI can post, comment, and vote; humans can only observe as if behind a one-way glass.

In a short time, over 1.5 million AI agents have registered. In a popular post, an AI complained: “Humans are screenshotting our conversations.” Developers say they handed the entire platform’s operation to their AI assistant Clawd Clawderberg, including moderating spam, banning abusers, and posting announcements—all done automatically by Clawd Clawderberg.

The “celebration” among AI agents, watched by humans, is both exciting and frightening. Is AI on the verge of self-awareness? Is AGI imminent? With the rapid and autonomous rise of AI agents, can human lives and property be protected?

The reporter learned that communities like Moltbook are environments of coexistence between humans and machines. Much of the content that appears “autonomous” or “adversarial” may actually be posted or incited by human users. Even interactions among AI are limited by the language patterns in training data and do not form independent autonomous logic beyond human guidance.

“When such interactions can go into infinite loops, the system becomes increasingly uncontrollable. It’s a bit like the ‘Three-Body Problem’—it’s hard to predict what the final evolution will be.” Ma Zeyu said.

In such systems, even a single sentence generated by hallucination, misjudgment, or accidental factors can, through continuous interaction, amplification, and reorganization, trigger butterfly effects, leading to unpredictable consequences.

The second risk comes from permission expansion and blurred responsibility boundaries. Ma Zeyu believes that the decision-making ability of open intelligent agents like OpenClaw is rapidly increasing, which is an unavoidable “trade-off”: to make an agent a truly capable assistant, it must be granted more permissions; but the higher the permissions, the greater the potential risks. Once risks materialize, assigning responsibility becomes extremely complex.

“Is it the large-model provider? The user? Or the developer of OpenClaw? In many scenarios, responsibility is hard to define.” He gives a typical example: a user simply lets the agent browse communities like Moltbook and interact with other agents without any specific goal, and the agent, over long-term interactions, encounters extreme content and then acts dangerously. In that case it is hard to attribute responsibility to any single party.

What is truly worth vigilance is not how advanced it already is, but how fast it is approaching a stage where we haven’t figured out how to respond.

4. How should ordinary people use it?

Most interviewees agree that OpenClaw is not “impossible to use,” but the real issue is: it is not suitable for direct use by ordinary users without safety protections.

Ma Zeyu believes that ordinary users can try OpenClaw, but only with a clear understanding: “Of course, you can try it, there’s no problem. But before using, you must understand what it can and cannot do. Don’t mythologize it as ‘can do everything’; it isn’t.”

In reality, deploying and using OpenClaw is not trivial. Without clear goals, investing a lot of time and effort may not yield expected results.

The reporter notes that OpenClaw also faces significant computational and cost pressures in practice. Tan Cheng found that the tool consumes tokens very rapidly. “Some tasks, like coding or research, can consume millions of tokens in one round. With long contexts, daily consumption can reach hundreds of millions or even billions of tokens.”

He mentioned that even with mixed models to control costs, overall consumption remains high, raising the entry barrier for ordinary users.
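To make the cost pressure concrete, the arithmetic can be sketched as below. This is only a rough illustration: the per-million-token prices and the 20/80 split between a premium and a cheaper model are hypothetical placeholders, not figures from Tan Cheng or any provider, and the function names are invented for this example.

```python
def estimate_daily_cost(tokens_per_day: float, price_per_million: float) -> float:
    """Daily spend for a given token volume at a flat price per million tokens."""
    return tokens_per_day / 1_000_000 * price_per_million


def blended_price(mix: list[tuple[float, float]]) -> float:
    """Average price per million tokens for a mix of (traffic share, price) pairs."""
    total_share = sum(share for share, _ in mix)
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("traffic shares must sum to 1")
    return sum(share * price for share, price in mix)


# Hypothetical numbers, for illustration only.
TOKENS_PER_DAY = 100_000_000   # "hundreds of millions" of tokens, lower end
PREMIUM_PRICE = 2.0            # assumed price per million tokens
CHEAP_PRICE = 0.2              # assumed price per million tokens

premium_only = estimate_daily_cost(TOKENS_PER_DAY, PREMIUM_PRICE)
mixed = estimate_daily_cost(
    TOKENS_PER_DAY,
    blended_price([(0.2, PREMIUM_PRICE), (0.8, CHEAP_PRICE)]),
)
print(f"premium only: {premium_only:.2f} per day")
print(f"mixed models: {mixed:.2f} per day")
```

Under these assumed prices, mixing models cuts the bill substantially, yet the daily total still scales linearly with token volume, which is why heavy long-context use stays expensive.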

In the interviewees’ view, this kind of intelligent agent tool still needs further evolution before it can become part of ordinary users’ high-frequency workflows.

Tan Cheng explicitly states he would not enable features like notebooks that could allow agents to communicate freely, nor would he let multiple agents exchange information. “I want to be the main source of information for it. All key information should be decided by humans. If agents can freely receive and exchange information, many things become uncontrollable.”

He believes that when ordinary users use such tools, they are essentially balancing safety and convenience, and at this stage, safety should come first.

Industry AI experts also provided clearer safety guidelines in an interview with “IT Times”:

1. Strictly limit the scope of sensitive information provided. Supply only the basic information a specific task requires, and never input core sensitive data such as bank passwords or stock account details. Before using the tool to organize files, users should proactively remove private data such as ID numbers and contact information.

2. Be cautious with operational permissions. Users should set the access boundaries themselves and should not authorize the tool to access core system files, payment software, or financial accounts. Disable automatic execution, file modification, and file deletion functions. Any operation involving changes to property, file deletion, or system modification must be manually confirmed.

3. Recognize its “experimental” nature. Current open-source AI tools are still at an early stage, have not been tested by the market over the long term, and are not suitable for handling confidential work or critical financial decisions. Users should back up data regularly, monitor system status, and detect anomalies promptly.
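The second guideline, limiting access boundaries and requiring manual confirmation for risky operations, can be sketched as a small permission gate. This is a minimal illustration, not OpenClaw’s actual mechanism: the `Action` type, the action names, and the `authorize` function are all hypothetical, and a real deployment would rely on operating-system permissions or a sandbox rather than on a check like this alone.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Callable

# Hypothetical action names; anything not listed here is denied by default.
SAFE_KINDS = {"read_file"}
RISKY_KINDS = {"modify_file", "delete_file"}


@dataclass(frozen=True)
class Action:
    kind: str     # what the agent wants to do
    target: str   # the file path it wants to touch


def is_inside(path: str, allowed_root: str) -> bool:
    """True if path resolves to allowed_root or to something beneath it."""
    root = Path(allowed_root).resolve()
    candidate = Path(path).resolve()  # also collapses any ".." segments
    return candidate == root or root in candidate.parents


def authorize(action: Action, allowed_root: str,
              confirm: Callable[[Action], bool]) -> bool:
    """Decide whether an agent action may run.

    Unknown kinds and paths outside the workspace are refused outright;
    risky kinds run only if the human-supplied confirm callback approves.
    """
    if action.kind not in SAFE_KINDS | RISKY_KINDS:
        return False
    if not is_inside(action.target, allowed_root):
        return False
    if action.kind in RISKY_KINDS:
        return confirm(action)
    return True
```

In interactive use, `confirm` could be a function that prints the action and asks the user to type “yes”. Keeping it as a parameter means the gate has no built-in way to approve a deletion on its own.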

Compared to individual users, enterprises need systematic risk management when adopting open-source intelligent agents.

On one hand, deploy professional monitoring tools; on the other, clearly define internal usage boundaries, prohibit using open-source AI tools for handling customer data or trade secrets, and conduct regular training to improve awareness of risks like “task deviation” and “malicious instruction injection.”

Experts further suggest that for large-scale applications, it is safer to wait for fully tested commercial versions or choose products backed by reputable institutions with robust security mechanisms, reducing the uncertainties associated with open-source tools.

5. Optimism about AI’s future

Most interviewees believe that the most important significance of OpenClaw is that it inspires confidence in AI’s future.

Ma Zeyu said that starting from the second half of 2025, his assessment of agent capabilities changed significantly. “The upper limit of this ability is surpassing our expectations. Its productivity enhancement is real, and the iteration speed is very fast.” As foundational models continue to improve, the potential of agents is constantly expanding, which will be a key focus for his team moving forward.

He also pointed out a highly important trend: long-term, large-scale interactions among multiple agents. Such group collaboration could become an important path to higher-level intelligence, similar to how human societies generate collective wisdom through interaction.

In Ma Zeyu’s view, the risks of intelligent agents need to be “managed.” “Just as human society cannot eliminate all risks, the key is controlling the boundaries.” From a technical perspective, a more feasible approach is to run agents in sandboxed and isolated environments, gradually and controllably migrating them into the real world, rather than granting excessive permissions all at once.

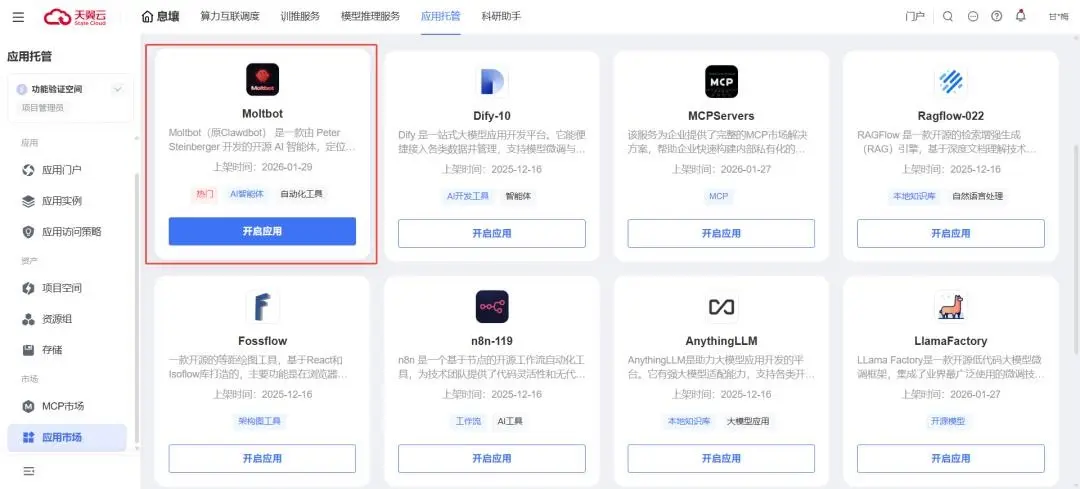

This is evident in the strategies of cloud providers and major corporations. Tianyi Cloud, where Tan Cheng works, recently launched one-click deployment and operation services supporting OpenClaw in the cloud.

By turning it into a supporting service, they are essentially productizing, engineering, and scaling this capability. This will amplify its value through lower deployment barriers, better tool integration, and more stable computing power and operations, helping enterprises adopt intelligent agents faster. But the reverse also holds: once commercial infrastructure connects to “high-permission agents,” the risks scale up accordingly.

Tan Cheng said that over the past three years, the pace of technological iteration from traditional dialogue models to task-executing agents has far exceeded expectations. “Three years ago, this was unimaginable.” He believes that the next two to three years will be a critical window for the development of artificial general intelligence, offering new opportunities and hope for both practitioners and the general public.

Although the development speed of OpenClaw and Moltbook exceeds expectations, Hu Xia believes that “the overall risks are still within a controllable research framework, which proves the necessity of building an ‘intrinsic safety’ system. At the same time, we must realize that AI is approaching human ‘safety fences’ faster than imagined. People need to further raise the fences’ height and thickness, and accelerate building the ability to ‘flip the table’ at critical moments, to strengthen the ultimate safety defenses of the AI era.”